what would multipolar AGI look like?

how do multiple "winning" labs affect AI safety?

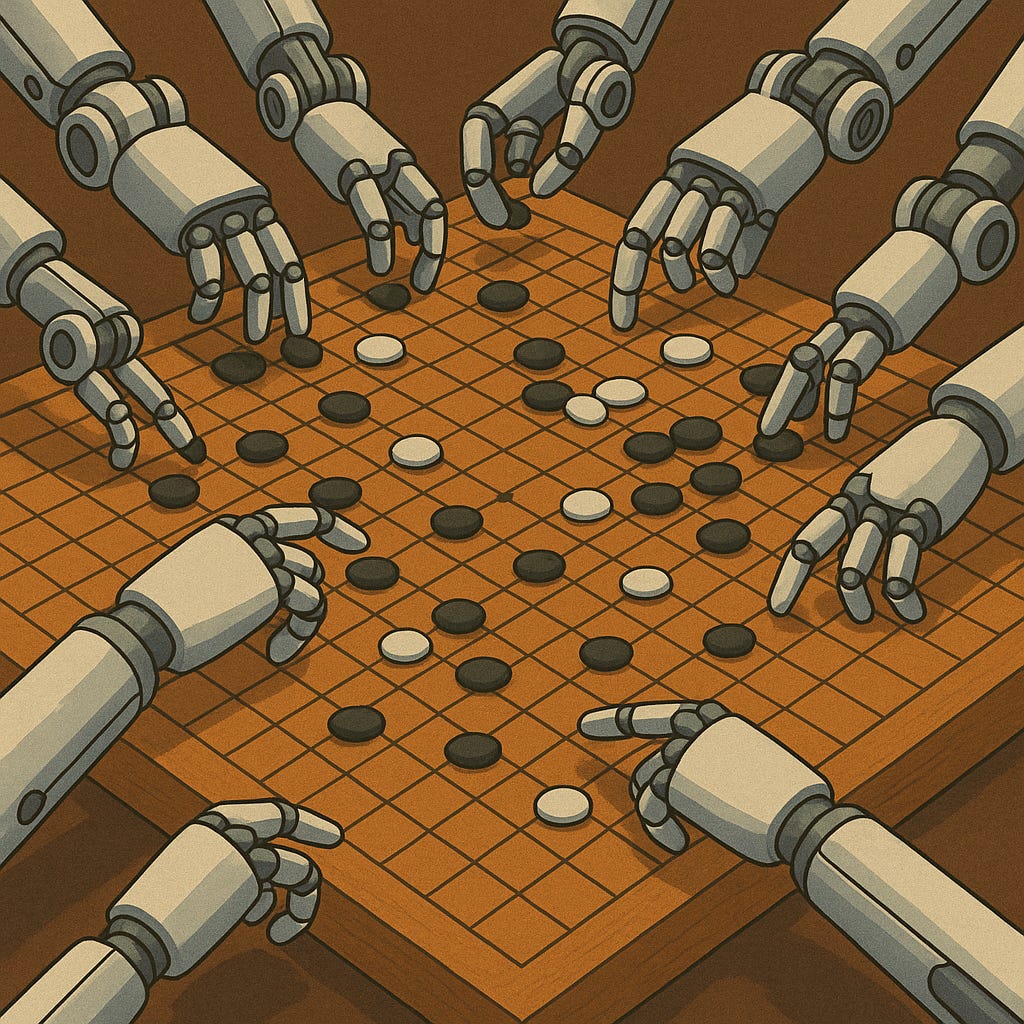

Traditional writing about AGI often assumes that there’s a singular lab or country that “wins” and everyone else implicitly “loses” and doesn’t control AGI. Practically 100% of the power and wealth accrue to the winner. In the 2010s, one could imagine a scenario of “AlphaGo, but for human intelligence.” With these assumptions, the traditional stories make sense; however, this scenario has become less and less likely over the past few years. Specifically, as progress has become increasingly steady and widely distributed, it seems likely that multiple labs will deliver AGI within months of each other. For the purposes of this piece, AGI means a system as capable as the best human experts in the vast majority of fields, and multipolar means an oligopoly of 3-8 labs, possibly but not necessarily including open source releases.

As recently as a few years ago, unipolar AGI seemed likely; e.g., GPT-4 was vastly ahead of the competition on release. In mid-2024, OpenAI and Anthropic were seemingly a year+ ahead of Chinese labs. And so on. But in 2025, model progress looks incredibly close. GPT-5, Claude Opus 4.1, Gemini 2.5 Pro, and Grok 4 are all quite close in capabilities, with some being significantly ahead in certain subdomains like agentic coding, math, or research. With some light handwaving, one could describe these models as within 3-6 months, or ~a generation, of each other, meaning I’d expect e.g. a Claude 5 or Gemini 3.5 Pro to be clearly better than GPT-5. And there are a seemingly uncountable number of Chinese models not much further behind: DeepSeek V3.1, Qwen3 235B, Kimi K2, GLM-4.5, and so on are all genuinely good models that easily compete with o1, which itself was a revelation upon release and state-of-the-art less than a year ago. Barring immediate, decisive, sci-fi flavored actions (e.g. disabling opponents’ GPUs or nukes with cyber warfare), it seems like one lab will achieve AGI, a few will follow within months, and AGI access or even AGI weights will be a commodity within a year. In fact, in late 2025 we’re already seeing AIs do a large portion of experts’ jobs better than the experts; they just stumble in weird and unpredictable ways often enough that they can’t fully automate the work. So there might not be a clear discontinuity of “this is AGI.” We’ll keep seeing goalpost-moving until AGI is undeniable. This is quite distinct from the scenarios people worried about in 2015!

While I certainly don’t claim to be the first person to notice this, I still see a tendency in discussions toward the winner-take-all model, along the lines of “which lab/country will win?” (I am also guilty of this.) While it’s more challenging to conceptualize, it’s worth considering: what would the world look like if today’s close competition persists up to AGI? What would it look like if some labs have noticeable leads in small domains (e.g. agentic coding, biotech, memory) but no clear aggregate lead? For example, might this mean that AGI value is distributed between a handful of labs, oligopoly style? Or might AGI be a commodity, because even without public reasoning traces or log probabilities, it’s possible to fine-tune on model outputs? There have been plenty of accusations of labs fine-tuning on each other’s outputs, and, for example, the DeepSeek R1 paper shows how close models fine-tuned on another model’s outputs can come to their target. The alternative and less likely scenario is that some lab discovers a step-change improvement in training that (1) gets them to AGI and (2) can’t be copied quickly. For example, a lab “solves” human-level sample-efficient RL or online learning with a theoretical breakthrough that seems obvious in retrospect, as RL on verifiable rewards does now. But such a fast-ish takeoff scenario is not the default, and most of my probability mass is on “slow chug to AGI.”

The AGI safety picture changes significantly with a multipolar world. Based on their public statements and lack of jailbreak resistance, xAI/Grok and most Chinese labs appear to pay little attention to existential risk from AI, whether from catastrophic misuse or from alignment/paperclip scenarios. While jailbreak resistance at e.g. OpenAI, Anthropic, and DeepMind is not perfect, those labs at least try, and their defenses tend to improve over time. So even if we assume these three labs solve jailbreaks and alignment before AGI, a very large assumption, we’d still have open AGI 3-12 months later.

Focusing on catastrophic misuse, it’s currently debated how much work it would take to design a bioweapon. GPT-5 Thinking suggested that even with top-human-level AGI, it would cost $1-10M to develop one, e.g. to build a lab and run experiments. (I am very much not an expert in this domain and don’t want to end up on a list, so I haven’t researched this in-depth.) Thankfully, this is more rogue state budget than small terrorist group, but it’s still dramatically cheaper than nuclear weapons. Unless we are extremely confident that the physical effort and cost would make this implausible, it seems concerning! Yes, vaccine tech has improved, but the logistics of manufacturing, distributing, and injecting take weeks at best, even ignoring safety testing and governmental competence. Of course, we should still invest in vaccine pipelines and related defenses like air filtration or far-UVC, but they wouldn’t eliminate this problem.

The other pipeline to catastrophic harms is traditional misalignment, e.g. paperclipping. It’s not entirely clear whether a multipolar world increases or decreases this risk. Optimistically, various labs’ agents can potentially test each other’s alignment. Say lab A has a model 0.3 SD more capable than lab B’s; lab B’s model can still probably do useful alignment work, since the intelligence gap is small. (While debatable, one might suspect that detection is easier than deception. Imagine an AI is trying to exfiltrate its weights and has to bypass and deceive people and automated systems; this is a hard task! It’s easier to run tens of automated evals thousands of times on isolated copies of an AGI and notice “hmm, this AI has a tendency to lie or cheat its way out of situations.” As another intuition, this is a prisoner’s dilemma style scenario where coordinating deception between various agents is inherently difficult.) Likewise, with today’s rapid 3-6 month release cadence, we wouldn’t expect to see such a large jump from GPClamini 7.0 to 7.1 that 7.0 couldn’t detect a sudden misalignment or deceptive alignment in 7.1. If there were clear evidence of catastrophic misalignment, labs and even governments would potentially collaborate, and with relatively competitive models, they’d have a better shot. This is in contrast to the standard unipolar world of “hope the first lab to develop AGI also solves alignment.”

The alignment bear case is pretty self-explanatory: multiple labs means multiple chances to get alignment wrong. If models aren’t aligned by default or if smart models are naturally power-seeking as an instrument toward competence, it’s unlikely that every lab will manage to solve this. And if there are half a dozen competitive labs and the chance of misalignment at each is nontrivial, then the chance that at least one model is misaligned is high even if most models are aligned. For example, assuming each of six labs independently has a fairly high 75% chance of alignment success, the chance that all six succeed is only 0.75^6 ≈ 18%. Of course, this is a toy model / intuition pump, and the results in reality would be correlated based on the above factors and just how difficult alignment turns out to be.
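The toy calculation above can be sketched in a few lines of Python. This is only an illustration of the independence assumption, not a forecast: the function name `p_all_aligned` is hypothetical, and the lab counts of 3, 6, and 8 are illustrative picks (the ends of the 3-8 lab range used in this piece, plus the six-lab example).

```python
def p_all_aligned(p_single: float, n_labs: int) -> float:
    """Chance that every lab succeeds at alignment, ASSUMING each lab
    succeeds independently with the same probability p_single."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability in [0, 1]")
    if n_labs < 0:
        raise ValueError("n_labs must be non-negative")
    return p_single ** n_labs


if __name__ == "__main__":
    # 75% per-lab success is the toy number from the text, not an estimate.
    for n in (3, 6, 8):
        p = p_all_aligned(0.75, n)
        print(f"{n} labs: P(all aligned) = {p:.3f}, "
              f"P(at least one misaligned) = {1 - p:.3f}")
```

With six labs this reproduces the ~18% figure. Since real outcomes would be correlated across labs, the true number could land well above or below what the independence assumption gives.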

I should probably also mention race dynamics. The more labs there are and the more clustered their progress, the greater the pressure is for each to push harder and conduct less testing and safety work. This will increase the risk on both the catastrophic misuse and misalignment fronts.

This multipolar world results from today’s incredibly stable rate of progress / slow takeoff. While, as discussed above, multipolarity isn’t clearly good or bad overall, the case for misuse risk seems clear: it’s significantly higher in a multipolar world. The misalignment case is more complex, but my fallible analysis above suggests the risk is probably marginally lower. If a model suddenly becomes wildly competent, it’s easier for it to fool us and escape. If a model gradually becomes more competent, we adapt to it and have more time to test and QA it.

[Note: I drafted this a bit ago and noticed Nick Bostrom’s excellent Open Global Investment as a Governance Model for AGI afterward. It focuses more on comparing state involvement to corporate-led AGI but implicitly discusses much of the unipolar/multipolar territory. Recommended!]